Data protection: Navigate AI risks with essential strategies

Cyber security | Cloud Life | 23 December 2023

Artificial Intelligence (AI) is here to stay, driving innovation and efficiency across all sectors. Yet we live and work in a reality where 74% of organizations globally have experienced at least one data security incident in the past year. This statistic shows the urgent need for data protection measures, especially now that AI is becoming more integrated into our systems, significantly amplifying the potential for data breaches and security threats.

Data Protection Essentials: Safeguarding Your Assets in Microsoft Cloud

AI systems, such as Microsoft Copilot for Microsoft 365, can process and analyze vast amounts of data at a speed and depth no human employee can match. While this presents immense opportunities for growth and insight, such as a 20% daily time saving, it also opens up new vulnerabilities. The complexity and diversity of data risks, particularly when data is processed and stored across various locations and workloads, increase sharply with Copilot integration.

- SharePoint & Teams permissions, or local storage. If these permissions are not set up properly and reviewed regularly, enabling Microsoft Copilot will give employees access to, and insights from, data they shouldn't have. This raises privacy and intellectual property concerns, as well as a greater risk of data leaks, insider threats and data theft.

- Information Protection. If no information protection policy and (automatic) encryption are configured for the Microsoft 365 and local storage environment, using technology such as Microsoft Information Protection, all (sensitive) data can potentially be shared externally. Once employees start working with tools such as Microsoft Copilot, they can find any data they are allowed to access within seconds. While this saves a lot of time, it also raises the risk of unwanted data leaks and data theft. Having more data at your fingertips also increases the chance of mistakes and of successful social engineering.

- Data Loss Prevention. If sensitive data is not secured with Microsoft data security tools such as DLP, it is easy to find and share externally, with no way to track who shared it and where.

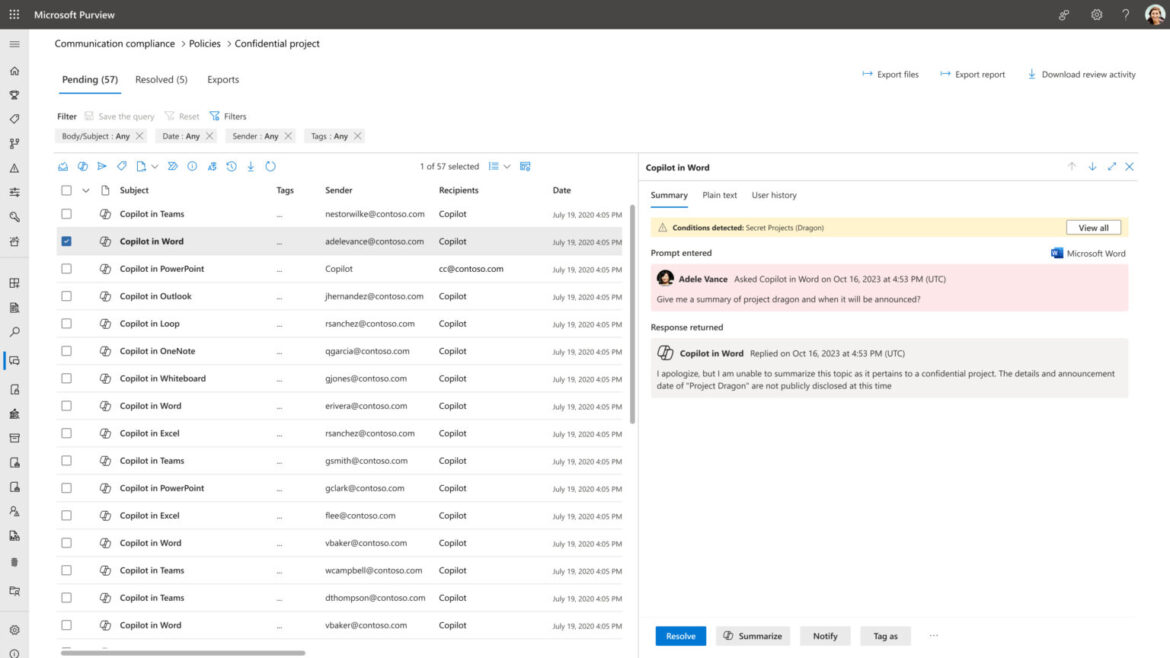

- Communication Compliance & Auditing. Safeguard the use of Microsoft Copilot by auditing and controlling how it is used. Monitor interactions in Copilot to identify inappropriate content, risky interactions, or the sharing of confidential information. Any interaction in the supported Copilot apps that matches a communication compliance policy can be flagged for review; without these procedures, Copilot might be used for wrongful or malicious purposes.

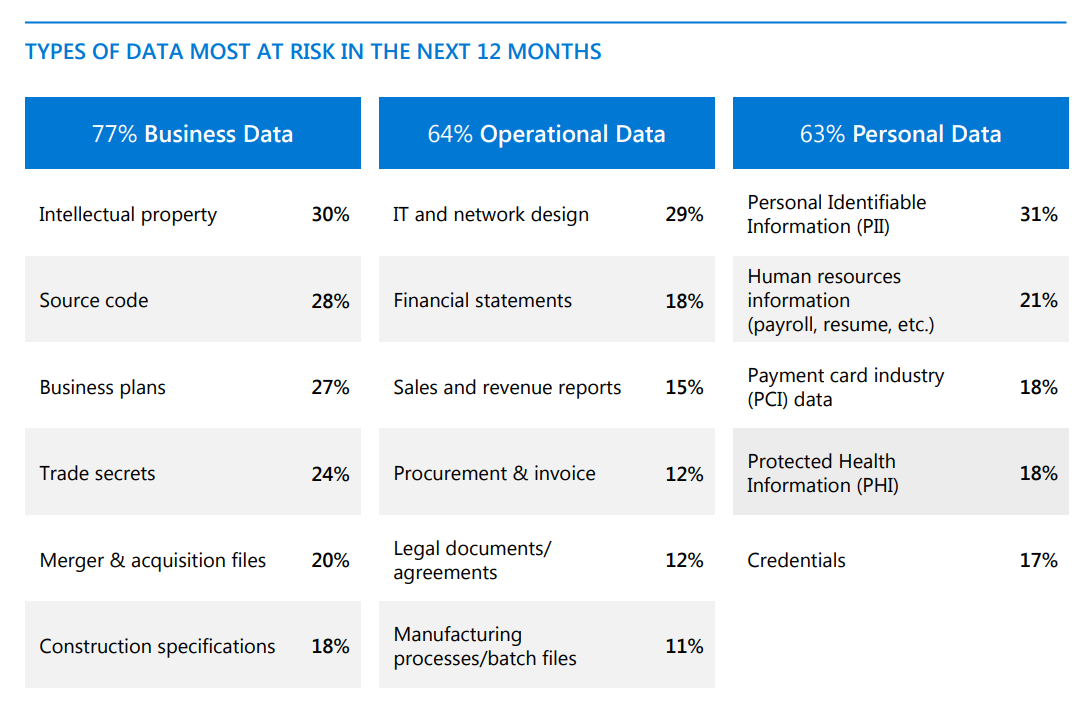

Decision-makers surveyed for the Microsoft Data Security Index report (October 2023) said they felt least prepared for data breaches, AI integration, malware, ransomware attacks, and malicious insider incidents, all of which can be amplified in an AI-driven environment. If you haven't done so yet, make sure you know what you can do to use Copilot fully and safely, the way it is designed to be used.

Solving the problems with Microsoft Purview

To overcome these risks, a solid approach to data protection is crucial. Data Loss Prevention (DLP) strategies play a vital role here: they help identify, monitor, and protect data in use, in motion, and at rest. Through deep content inspection and continuous security analysis, Microsoft Purview solutions such as Information Protection, Adaptive Protection, Insider Risk Management and Data Loss Prevention can prevent unauthorized insight into sensitive data, as well as the leaking or theft of sensitive information outside the corporate network.
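To make "deep content inspection" more concrete, here is a minimal Python sketch of one common technique: a pattern match confirmed by a checksum, in this case a 16-digit card-number pattern validated with the Luhn algorithm. It is a simplified illustration, not how Microsoft Purview implements its sensitive information types; the function names and the block/allow policy are hypothetical.

```python
import re

# Candidate pattern: 16 digits, optionally grouped in fours by spaces or hyphens.
# Simplified assumption; real classifiers cover many more formats and keywords.
CARD_PATTERN = re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    # Walk from the rightmost digit and double every second digit.
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return pattern matches that also pass the checksum."""
    hits = []
    for match in CARD_PATTERN.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


def dlp_decision(text: str) -> str:
    """Hypothetical policy: block external sharing if any card number is found."""
    return "block" if find_card_numbers(text) else "allow"


print(find_card_numbers("Card: 4111 1111 1111 1111, ref 1234 5678 9012 3456"))
```

Requiring the checksum as well as the pattern is what keeps random 16-digit strings, such as the order reference above, from triggering the policy.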

Examine prompts and risky interactions with AI.

In Communication Compliance, it is possible to examine user prompts and Copilot responses to identify any inappropriate or potentially risky interactions or the sharing of confidential information.


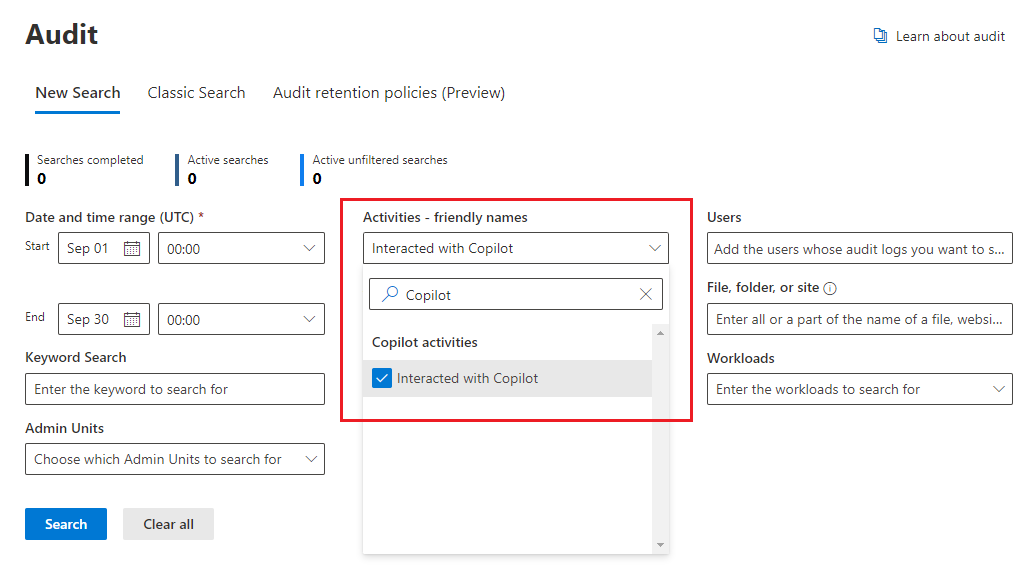

Auditing.

For auditing purposes, Copilot records various details when users engage with it. These details include how and when users interact with Copilot, the Microsoft 365 service in which these interactions occur, and any references to files stored in Microsoft 365 that are accessed during these interactions. If these files have a sensitivity label applied, this information is also logged.

To access these audit records, you can go to the Audit solution within the Microsoft Purview compliance portal. Here, you can select “Copilot activities” and specifically look for “Interacted with Copilot.” Additionally, you have the option to designate Copilot as a distinct workload for more comprehensive auditing.
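As an illustration of what you might do with these records once exported, the Python sketch below filters a list of audit entries down to Copilot interactions and counts, per user, how many sensitivity-labeled files Copilot referenced. The record structure is a hypothetical simplification loosely modeled on the "CopilotInteraction" operation; real audit exports nest most details in a JSON payload, so verify the actual schema before adapting it.

```python
from collections import Counter

# Hypothetical, simplified audit records. Field names are illustrative only;
# check the schema of your own audit export before adapting this.
records = [
    {"operation": "CopilotInteraction", "user": "alice@contoso.com", "app": "Word",
     "accessed_files": [{"name": "Q3-plan.docx", "label": "Confidential"}]},
    {"operation": "FileAccessed", "user": "bob@contoso.com", "app": "SharePoint",
     "accessed_files": [{"name": "menu.pdf", "label": None}]},
    {"operation": "CopilotInteraction", "user": "bob@contoso.com", "app": "Teams",
     "accessed_files": [{"name": "salaries.xlsx", "label": "Highly Confidential"},
                        {"name": "notes.txt", "label": None}]},
]


def copilot_interactions(records):
    """Keep only records that represent Copilot interactions."""
    return [r for r in records if r["operation"] == "CopilotInteraction"]


def labeled_files_by_user(records):
    """Count, per user, how many labeled files Copilot referenced for them."""
    counts = Counter()
    for r in copilot_interactions(records):
        for f in r["accessed_files"]:
            if f["label"] is not None:
                counts[r["user"]] += 1
    return counts


print(labeled_files_by_user(records))
```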

Having multiple security tools vs. one platform.

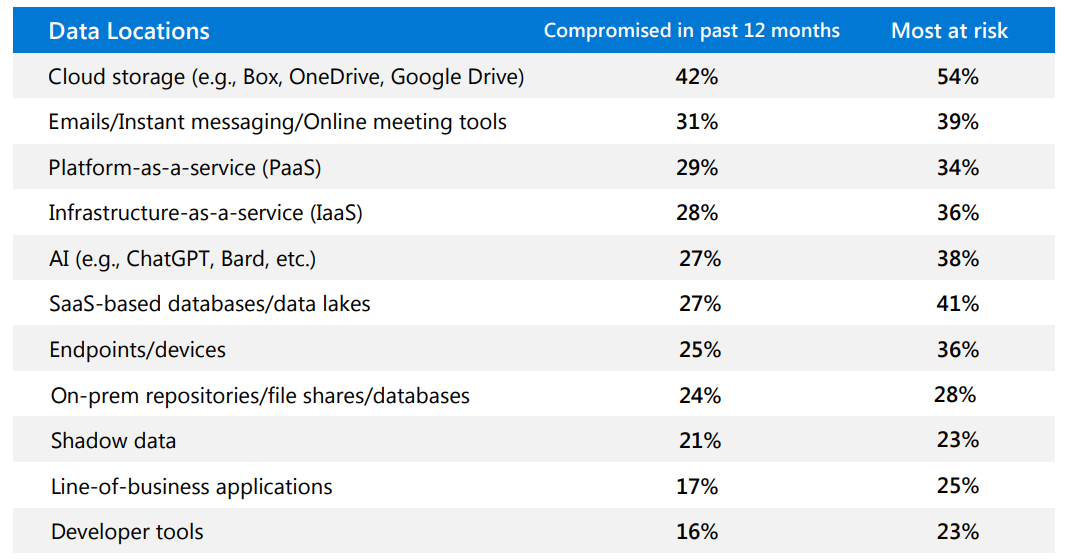

The recent Microsoft Data Security Index October 2023 report points out that using multiple disjointed tools can lead to increased incidents and severity. Organizations employing more than 16 tools to secure data face 2.8 times more data security incidents compared to those who use fewer tools. This insight brings to light the necessity for integrated, comprehensive solutions rather than a patchwork of security measures.

After all, every one of these tools needs to be managed, secured, and integrated with the others to create one secure environment. Managing them separately creates more security gaps and risks than choosing one integrated platform such as Microsoft Purview.

Data from October 2023

Microsoft Purview: A Unified Approach to AI Security

Microsoft Purview presents a solution that aligns with the need for an integrated approach. It offers a unified data governance service that helps organizations manage and govern their on-premises, multicloud, and software-as-a-service (SaaS) data. With tools like DLP, Purview helps in understanding and controlling your data—where it is, what’s happening to it, and who is accessing it.

Purview's capabilities are especially valuable in an AI-driven environment, where there isn't just a lot of data, but the data is also highly dynamic. It helps automatically discover, classify, and protect sensitive data throughout its lifecycle, addressing the report's emphasis on safeguarding different types of data across locations and applications. By providing insight into user activity and the context in which data is used, it identifies risks around sensitive data, such as intellectual property theft and data leakage, which are critical in an AI-enhanced Microsoft 365 environment.

Proactive Prevention without needing more FTE

Proactively preventing data security incidents with security and compliance controls built into the cloud apps, services, and devices users use every day is key. Purview integrates these controls, catering to the unique needs of an AI-enhanced environment by dynamically tailoring security based on the user’s risk level, thereby accelerating incident response and mitigating emerging risks proactively.
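A minimal Python sketch of the idea behind risk-adaptive enforcement: the same sensitive content triggers a stricter DLP action as the user's risk level rises. The risk tiers are named after those used by Adaptive Protection (Minor, Moderate, Elevated), but the action assigned to each tier is a hypothetical example; in practice, administrators configure these in their DLP policies.

```python
from enum import Enum


class RiskLevel(Enum):
    # Tiers named after Adaptive Protection's risk levels (assumption: NONE added
    # here to represent users with no assigned risk level).
    NONE = 0
    MINOR = 1
    MODERATE = 2
    ELEVATED = 3


# Hypothetical mapping from a user's risk level to the action a DLP rule applies
# when that user tries to share sensitive content externally.
ACTIONS = {
    RiskLevel.NONE: "allow",
    RiskLevel.MINOR: "allow_with_policy_tip",
    RiskLevel.MODERATE: "block_with_override",
    RiskLevel.ELEVATED: "block",
}


def dlp_action(risk: RiskLevel, content_is_sensitive: bool) -> str:
    """Return the enforcement action; non-sensitive content is always allowed."""
    if not content_is_sensitive:
        return "allow"
    return ACTIONS[risk]


for level in RiskLevel:
    print(level.name, dlp_action(level, content_is_sensitive=True))
```

The design choice is that users with no risk signals keep working unhindered, while controls tighten automatically only for the users whose behavior warrants it.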

“At least once a month, I get a call from a panicked director… ‘we’ve had an event, I’ve uncovered an event, or the threat team has uncovered an event.’ Some of them are unintentional, some are people not knowing or understanding what their privileges allow.”

US Government CISO

Furthermore, when users work with Copilot's Microsoft 365 Chat feature, which can access a wide range of content, the sensitivity of labeled data retrieved by Copilot for Microsoft 365 is made visible to them: the sensitivity label is displayed for citations and for the items listed in the response. The latest response in Microsoft 365 Chat shows the highest-priority sensitivity label among the data used in that Copilot chat, based on the priority number defined in the Microsoft Purview compliance portal.

Compliance administrators determine the priority of sensitivity labels, and a higher priority number generally indicates more sensitive content with more restrictive permissions. Consequently, Copilot responses are labeled with the most restrictive sensitivity label among the content they draw on.
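The label-selection rule described above can be sketched in a few lines of Python: among the labeled sources used in a response, pick the one with the highest priority number. The label names and priority values below are hypothetical; each organization defines its own taxonomy in the Microsoft Purview compliance portal.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SensitivityLabel:
    name: str
    priority: int  # higher number = more sensitive (set by compliance admins)


# Hypothetical label taxonomy; your tenant's names and priorities will differ.
PUBLIC = SensitivityLabel("Public", 0)
GENERAL = SensitivityLabel("General", 1)
CONFIDENTIAL = SensitivityLabel("Confidential", 2)
HIGHLY_CONFIDENTIAL = SensitivityLabel("Highly Confidential", 3)


def label_for_response(
    source_labels: list[Optional[SensitivityLabel]],
) -> Optional[SensitivityLabel]:
    """Pick the highest-priority label among the sources Copilot used.

    Unlabeled sources (None) contribute nothing, so if no source is labeled
    the response gets no label at all.
    """
    labeled = [lbl for lbl in source_labels if lbl is not None]
    if not labeled:
        return None
    return max(labeled, key=lambda lbl: lbl.priority)


print(label_for_response([GENERAL, None, CONFIDENTIAL]).name)
```

Note that unlabeled sources contribute nothing: if none of the sources carry a label, the result is `None`, which is why unlabeled content can lead to unprotected Copilot output.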

What tools can help you right now

The following capabilities from Microsoft Purview strengthen your data security and compliance for Microsoft Copilot for Microsoft 365:

- Sensitivity labels and content encrypted by Microsoft Purview Information Protection

- Data classification

- Customer Key

- Communication compliance

- Auditing

- Content search

- eDiscovery

- Retention and deletion

If you're not already using sensitivity labels, now is the time to get started with them.

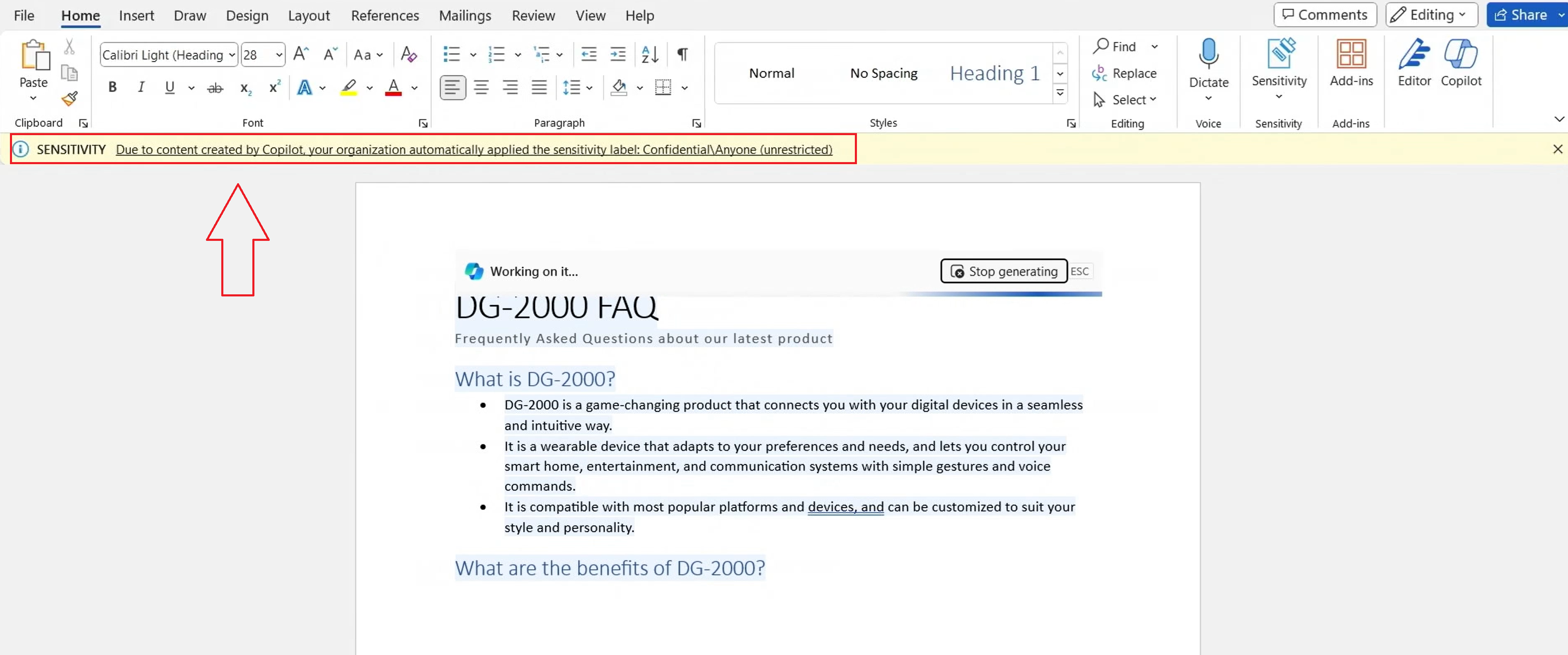

In the video at the top of this page (and the screenshot above), you can see that a Word document generated by Copilot is automatically labeled 'Confidential' because of the sensitive content in the Word draft, which was also created with Copilot.

This would not happen if the files or content used in the draft were unlabeled; Copilot would then create even more sensitive data that is unsecured and unprotected.

Conclusion

As AI continues to reshape our world, the importance of taking additional security measures cannot be overstated. The insights we get from our customers every day, the recent Microsoft Data Security Index, and the possibilities that Microsoft Purview offers combine into a unique opportunity for organizations to re-evaluate their cloud security.

By starting now with integrated solutions like Microsoft Purview and adopting robust DLP strategies, businesses can not only protect themselves against the heightened risks brought on by AI but also use its full potential safely and responsibly. The path to AI innovation can be a challenging one, but with the right approach, it can also lead to a future of unprecedented security and growth.

Written by: Cloud Life
